Question: So, what is it that makes a vote go one way or the other?

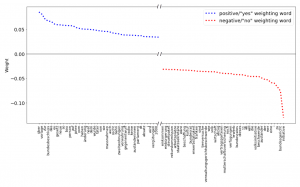

Answer: First of all, it depends on the model. In a logistic regression model (LRM), the words can be extracted and sorted by their weight once the model has been trained. The graph displays the 40 most heavily weighted words for a vote to be accepted or rejected. However, more than 50,000 words contribute to the outcome of the prediction.
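The extract-and-sort step can be sketched in a few lines of Python. This is a minimal illustration, not the code behind the graph: the function name `top_weighted_words` and the toy values are hypothetical. With scikit-learn, for example, the two inputs would typically come from a vectorizer's `get_feature_names_out()` and a fitted `LogisticRegression`'s `coef_[0]`.

```python
def top_weighted_words(vocabulary, weights, k=20):
    """Return the k most positive and the k most negative (word, weight) pairs."""
    if len(vocabulary) != len(weights):
        raise ValueError("vocabulary and weights must have the same length")
    # Sort ascending by weight: most negative first, most positive last.
    ranked = sorted(zip(vocabulary, weights), key=lambda pair: pair[1])
    k = min(k, len(ranked))
    most_negative = ranked[:k]        # words pushing towards "rejected"
    most_positive = ranked[::-1][:k]  # words pushing towards "accepted"
    return most_positive, most_negative


# Toy values for illustration only, NOT the weights of the trained model.
vocabulary = ["bundesbeschluss", "initiative", "vorlage", "ausländer"]
weights = [1.2, -1.5, 0.8, -0.6]

accepted, rejected = top_weighted_words(vocabulary, weights, k=2)
print("accepted:", accepted)
print("rejected:", rejected)
```

Calling the function with `k=20` on the full vocabulary would give the 20 + 20 words needed for a chart of the 40 most heavily weighted words.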

Words like 'Bundesbeschluss, Vorlage, Vergütungen, Massnahmen' ('federal decree, bill, remuneration, measures') have a positive weight, whereas words like 'Initiative, Beschwerde, Ausländer, Wirtschaft, Franken' ('popular initiative, complaint, foreigners, economy, Swiss francs') have a negative weight. However, these words alone do not decide the prediction: because more than 50,000 words contribute to the model, the remaining words in a text can still overturn the outcome.
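Why a single heavily weighted word does not settle the outcome can be seen from how a logistic regression combines its inputs: the weights of all words present are summed and passed through a sigmoid. The sketch below assumes simple bag-of-words counts as features; the weights are invented for illustration and `acceptance_probability` is a hypothetical helper, not part of the original model.

```python
import math


def acceptance_probability(word_counts, weights, bias=0.0):
    """Logistic regression score: sigmoid of the weighted sum of word counts."""
    score = bias + sum(
        weights.get(word, 0.0) * count  # unknown words contribute nothing
        for word, count in word_counts.items()
    )
    return 1.0 / (1.0 + math.exp(-score))


# Invented weights for illustration only, NOT taken from the trained model.
weights = {
    "initiative": -1.5,
    "massnahmen": 0.4,
    "vorlage": 0.5,
    "bundesbeschluss": 0.9,
}

# One strongly negative word on its own leans towards rejection ...
only_initiative = acceptance_probability({"initiative": 1}, weights)

# ... but several mildly positive words can outweigh it.
mixed_text = acceptance_probability(
    {"initiative": 1, "massnahmen": 1, "vorlage": 1, "bundesbeschluss": 1},
    weights,
)

print(only_initiative < 0.5, mixed_text > 0.5)
```

In the real model the sum runs over tens of thousands of words, so many small contributions can easily outweigh a few large ones.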

For the machine learning models based on neural networks, it is more difficult to illustrate how the network 'decides' which way the coin is going to fall. The neural networks used are based on convolutional or long short-term memory (LSTM) layers. These layers work like filters and extract features, words, or sequences in a way that is harder to illustrate, especially in the case of text. The neural networks are built on word embeddings, and information about the inner workings of the network can be derived from these embeddings. This will be explained in the next section.
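To give a rough idea of what "working like a filter" means, the following toy sketch slides a single convolution filter over a sequence of word embeddings and reports where it responds most strongly. It is a simplified illustration under stated assumptions, not the architecture used here: the two-dimensional embeddings, the kernel values, and the helper `conv1d_max` are all made up for the example.

```python
def conv1d_max(embeddings, kernel, bias=0.0):
    """Slide one filter over a sequence of word vectors.

    Returns the strongest ReLU activation and the window position where it occurs.
    """
    width = len(kernel)
    if len(embeddings) < width:
        raise ValueError("sequence is shorter than the filter width")
    activations = []
    for start in range(len(embeddings) - width + 1):
        window = embeddings[start:start + width]
        # Dot product between the filter and the window of word vectors.
        score = bias + sum(
            w * x
            for kernel_row, vector in zip(kernel, window)
            for w, x in zip(kernel_row, vector)
        )
        activations.append(max(0.0, score))  # ReLU non-linearity
    best = max(range(len(activations)), key=activations.__getitem__)
    return activations[best], best


# Made-up 2-dimensional embeddings for a 4-word text (illustration only).
embeddings = [[0.1, 0.0], [0.9, 0.2], [0.8, 0.9], [0.0, 0.1]]
# A filter of width 2: reacts to dimension 0 of one word followed by
# dimension 1 of the next word.
kernel = [[1.0, 0.0], [0.0, 1.0]]

activation, position = conv1d_max(embeddings, kernel)
print(activation, position)
```

A real network learns many such filters at once, and their values are not directly readable as words, which is why these models are harder to illustrate than a sorted list of weights.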